There are startups building AI agents to defend you from cyber-attacks, and many in the industry agree AI will be effective in doing so. In fact, research from the Cloud Security Alliance notes that 63% of security professionals believe AI has the ‘potential to enhance security measures’.

But what happens when the companies offering artificial intelligence technology services are the ones to falter?

Several lawsuits have been filed after AI facial recognition products allegedly produced discriminatory outcomes, and generative AI companies have been sued for allegedly training models on copyrighted material.

Recognizing the limitations of traditional Cyber and Technology Errors & Omissions policies, Relm Insurance developed NOVAAI, a specialized solution tailored to the unique liability and cybersecurity risks AI companies face. From violations of fast-changing AI regulation to claims of discrimination, NOVAAI is designed to meet the evolving demands of businesses in the AI sector.

Understanding the New AI Liability Risks for AI Developers

The rise of AI technology introduces risks that traditional insurance policies aren’t designed to cover. For companies offering AI-driven products or services, these challenges are both complex and pressing.

NOVAAI specifically addresses these risks, including, but not limited to, the following:

- AI technology liability: Claims and expenses arising from errors or failures in AI services or AI technology products sold, licensed or distributed to others.

- AI-generated content risks: Legal disputes over content created by AI tools, including copyright infringement or defamation claims.

- Regulatory violations: Costs tied to AI-specific regulations, such as the EU Artificial Intelligence Act (EU AIA), adjacent rules, or emerging legislation governing AI practices, including fines where insurable by law.

- Bias and discrimination: Allegations that an AI system, such as a hiring tool or algorithm, produced discriminatory outcomes.

“AI liability isn’t just about new risks — it’s about how existing technological risks evolve in a way that traditional insurance needs to keep pace with,” explains Claire Davey, Relm’s Head of Product Innovation and Emerging Risk. “NOVAAI is designed to adapt to these changes, protecting companies as they navigate the challenges of operating in this dynamic environment.”

Real-World Exposure: When NOVAAI Matters

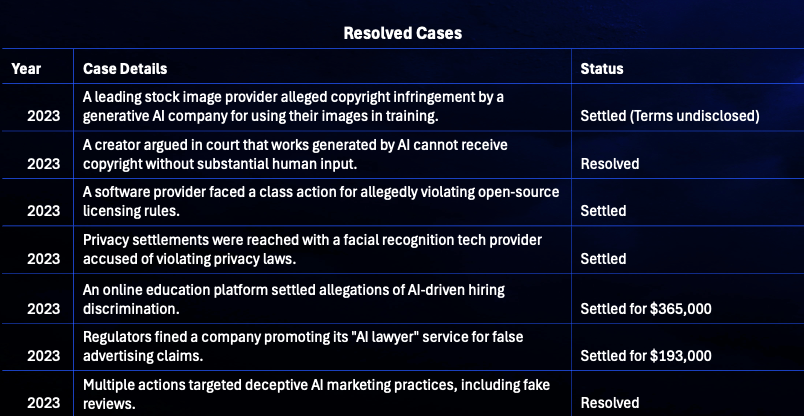

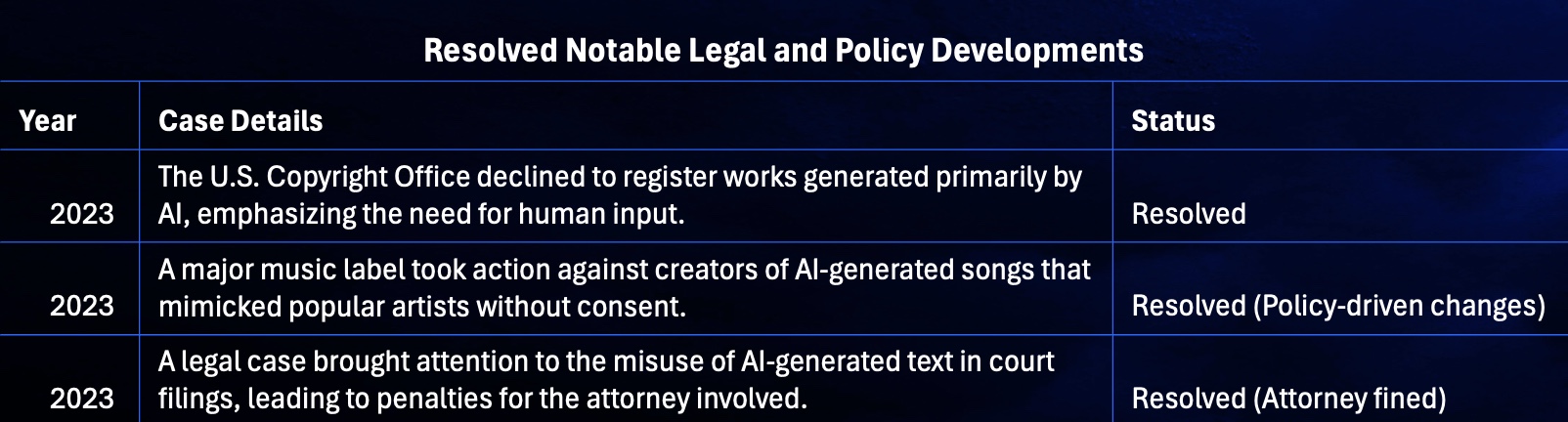

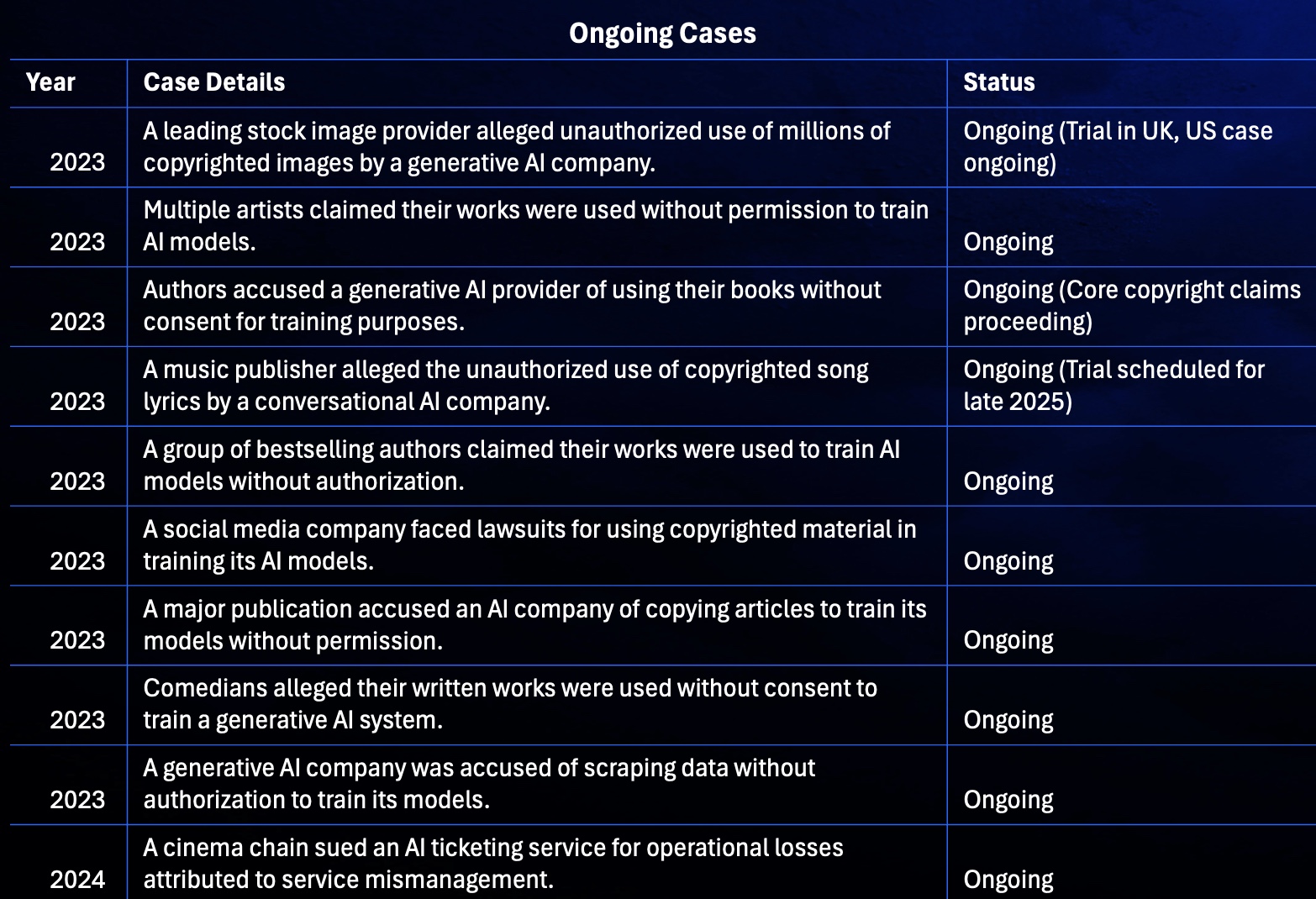

AI companies face an increasing number of liability scenarios as their products integrate into everyday life. From content generation tools to regulatory frameworks that are rapidly evolving, the need for specialized coverage like NOVAAI is more pressing than ever. Below are just some recent instances of the AI risk landscape:

These recent cases demonstrate the growing concern around AI and copyright infringement, which highlights the need for tailored insurance that covers such risks.

Broker AI Risk Scenario: Where NOVAAI Fits In

With NOVAAI, brokers can secure coverage for their clients that keeps pace with AI operations, demonstrating an awareness of clients’ existing and future risk transfer needs, particularly for complex and emerging risks.

Recruitment SaaS

Consider an insurance broker working with a technology company that specializes in AI-driven talent acquisition platforms. The client’s software analyzes resumes, filters candidates, and even conducts automated interviews. While the system has been designed with the best intentions, regulators discover that its algorithms unintentionally discriminate against certain demographic groups, resulting in unfair hiring practices. This triggers an investigation under emerging AI regulations, such as the EU’s Artificial Intelligence Act, and under anti-discrimination laws in the U.S., potentially leading to hefty civil fines and legal claims.

Without proper coverage, the client faces significant financial exposure, including:

- Claim expenses for legal defense and settlements tied to the alleged discriminatory practices.

- Civil fines and penalties resulting from the breach of regulatory standards.

- Reputational damage as news of the noncompliance spreads, leading to decreased client trust and potential loss of business.

A standard Technology E&O policy wouldn’t explicitly cover fines or claims related to AI regulatory violations. However, Relm’s NOVAAI offers affirmative coverage for these scenarios, protecting the client from the financial consequences of noncompliance with AI regulations (where legally insurable). For the broker, introducing NOVAAI not only fills a critical gap in the client’s risk management strategy but also positions them as a forward-thinking advisor who understands the evolving regulatory landscape of AI technology.

By offering NOVAAI, brokers can help clients:

- Proactively address risks tied to regulatory noncompliance, ensuring they have the financial protection needed to navigate this uncharted legal territory.

- Build resilience as AI regulations become more stringent, particularly in regions like the EU, the U.S., and Asia-Pacific.

- Maintain their operational and reputational stability, even in the face of complex liability challenges.

Claims scenario #1: IP infringement

An e-gaming company, ‘GameX Studios’, develops an open-world RPG that allows users to create highly customizable avatars. To speed up development and enhance creativity, they use a generative AI model trained on vast datasets of existing character designs from popular games, movies, comics, and anime.

The AI unintentionally generates avatars that closely resemble copyrighted characters from those franchises. Players notice that some AI-generated avatars have nearly identical features to well-known characters.

Because GameX Studios integrated these AI-generated avatars into their game’s customization options without ensuring originality, the rightsholders of these franchises claim copyright infringement, arguing:

- Derivative Works: The AI-generated avatars are unauthorized adaptations of copyrighted characters.

- Unauthorized Training Data: If the AI was trained on protected character designs without permission, the training itself could be alleged to infringe copyright.

- Commercial Exploitation: Since the game sells avatar skins and character customization options, it directly profits from potentially infringing designs.

The damages and defense costs GameX incurs because of this lawsuit would be insurable under NOVAAI.

Claims scenario #2: Discrimination

A multinational tech company, ‘TechHire Inc.’, implements an AI-powered hiring tool to screen job applicants. The AI system, trained on past hiring data, predicts which candidates are most likely to succeed in the company and automatically shortlists them for interviews.

The AI starts favoring white candidates over equally qualified black applicants. After investigation, it is found that:

- The AI was trained on historical hiring data that contained racial biases (e.g., past hiring managers typically hired white candidates with traditional American-sounding names for technical roles).

- The model assigned lower scores to resumes that listed historically Black colleges and universities.

TechHire subsequently faces lawsuits under anti-discrimination laws from those affected, both TechHire’s clients and their candidates. It is also subject to regulatory investigations because of the discrimination and its failure to perform annual bias audits.
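To make the idea of a bias audit concrete, here is a minimal sketch of one metric such audits commonly report: the impact ratio, meaning each group's selection rate divided by the highest group's selection rate. The 0.8 threshold reflects the "four-fifths" rule of thumb from U.S. employment guidance. The function name, group labels, and applicant counts below are hypothetical and purely illustrative; this is not how TechHire, any regulator, or NOVAAI underwriters would necessarily assess a system.

```python
def impact_ratios(selected: dict, applicants: dict) -> dict:
    """Return each group's selection rate relative to the highest-rate group.

    Assumes every group has at least one applicant and that at least
    one candidate was selected overall (hypothetical helper).
    """
    rates = {group: selected[group] / applicants[group] for group in applicants}
    best_rate = max(rates.values())
    return {group: rate / best_rate for group, rate in rates.items()}


# Hypothetical shortlist counts, for illustration only.
applicants = {"group_a": 200, "group_b": 200}
selected = {"group_a": 60, "group_b": 30}

ratios = impact_ratios(selected, applicants)

# Four-fifths rule of thumb: flag groups whose impact ratio is below 0.8.
flagged = [group for group, ratio in ratios.items() if ratio < 0.8]
print(ratios, flagged)
```

A flagged group is a prompt for closer review, not proof of unlawful discrimination; real audits look at sample sizes, intersectional categories, and the requirements of the applicable jurisdiction.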

Claims scenario #3: Regulatory fines and penalties

A healthtech company, ‘MediAI Solutions’, develops an AI-powered diagnostic tool that assists doctors in detecting heart disease. Since medical AI systems impact health outcomes, they are classified as high-risk under the EU Artificial Intelligence Act (EU AIA) and must meet strict compliance requirements.

However, the AI was trained primarily on male patient data, leading to lower accuracy for female patients. As a result, women showing atypical heart attack symptoms (e.g., nausea, fatigue) are often misdiagnosed or ignored, increasing their risk of fatal complications. The system provides no clear explanation for its diagnoses, making it difficult for doctors or patients to challenge or verify AI-generated results.

Under the EU AI Act, breaches of high-risk AI obligations (e.g., lack of fairness, explainability, and human oversight) can result in fines of up to €15 million or 3% of the company’s global annual turnover, whichever is higher.
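As a rough illustration of how that ceiling scales with company size, the sketch below computes the maximum possible fine for a given turnover. It assumes the "whichever is higher" rule that applies to larger undertakings (smaller companies are treated differently under the Act), and the turnover figures are hypothetical rather than drawn from the MediAI scenario. The function name is illustrative only.

```python
FIXED_CAP_EUR = 15_000_000   # fixed ceiling for high-risk obligation breaches
TURNOVER_PERCENT = 3         # percentage of global annual turnover


def max_high_risk_fine_eur(global_annual_turnover_eur: float) -> float:
    """Upper bound on the fine, assuming the higher of the two caps applies."""
    turnover_based_cap = global_annual_turnover_eur * TURNOVER_PERCENT / 100
    return max(FIXED_CAP_EUR, turnover_based_cap)


# Hypothetical turnover figures, for illustration only.
print(max_high_risk_fine_eur(100_000_000))    # fixed cap is the larger value
print(max_high_risk_fine_eur(2_000_000_000))  # turnover-based cap is the larger value
```

These are ceilings, not expected penalties; the amount actually imposed depends on the regulator's assessment of the breach.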

These regulatory fines and penalties are insurable under NOVAAI, where insurable at law, along with claim expenses incurred in responding to the regulatory investigation. Any damages and claim expenses arising from claims of negligence and breach of contract against ‘MediAI Solutions’ by healthcare institutions would also be covered.